I'm an undergrad at UC Berkeley advised by Sergey Levine and Aviral Kumar. I'm interested in building systems that can efficiently learn general skills.

News

- Sep 2025: New blog post summarizing our two papers on scaling laws for RL with value functions and discussing open problems.

- Sep 2025: New paper on compute-optimal scaling for value-based RL is accepted to NeurIPS!

- May 2025: I'm an Accel Scholar!

- May 2025: New paper on scaling laws for value-based RL is accepted to ICML!

Research

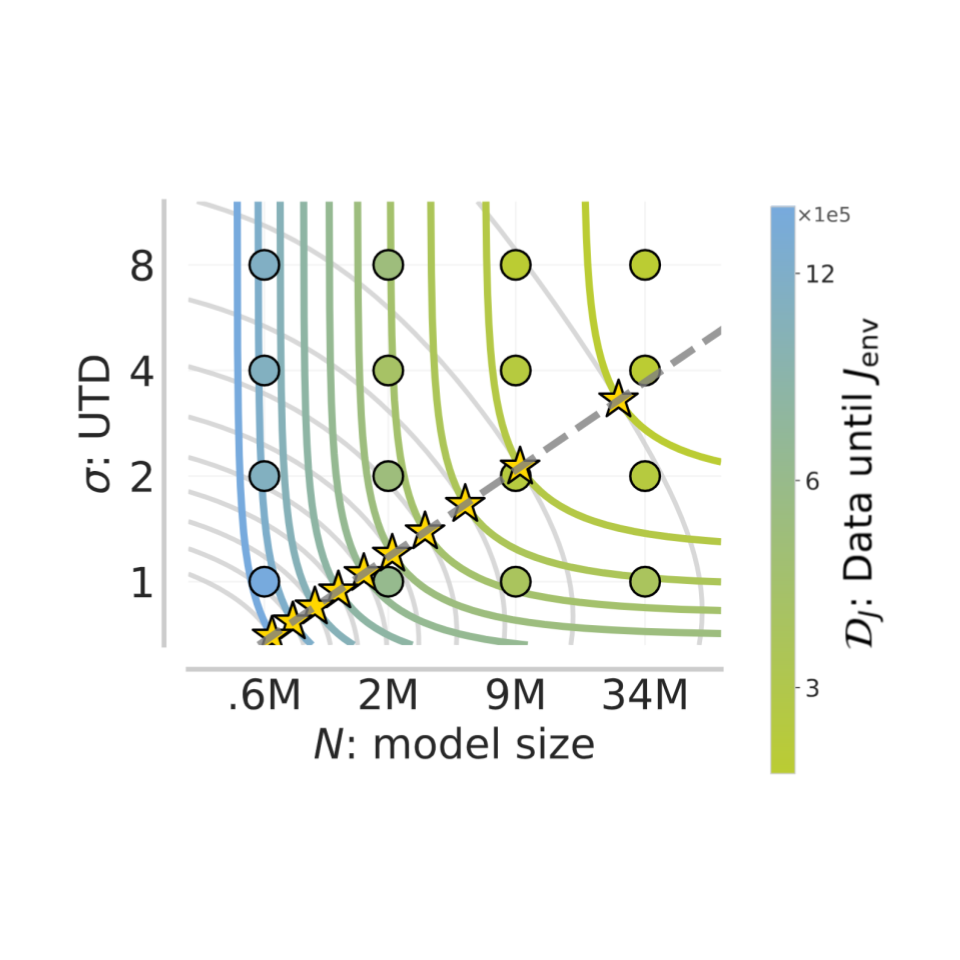

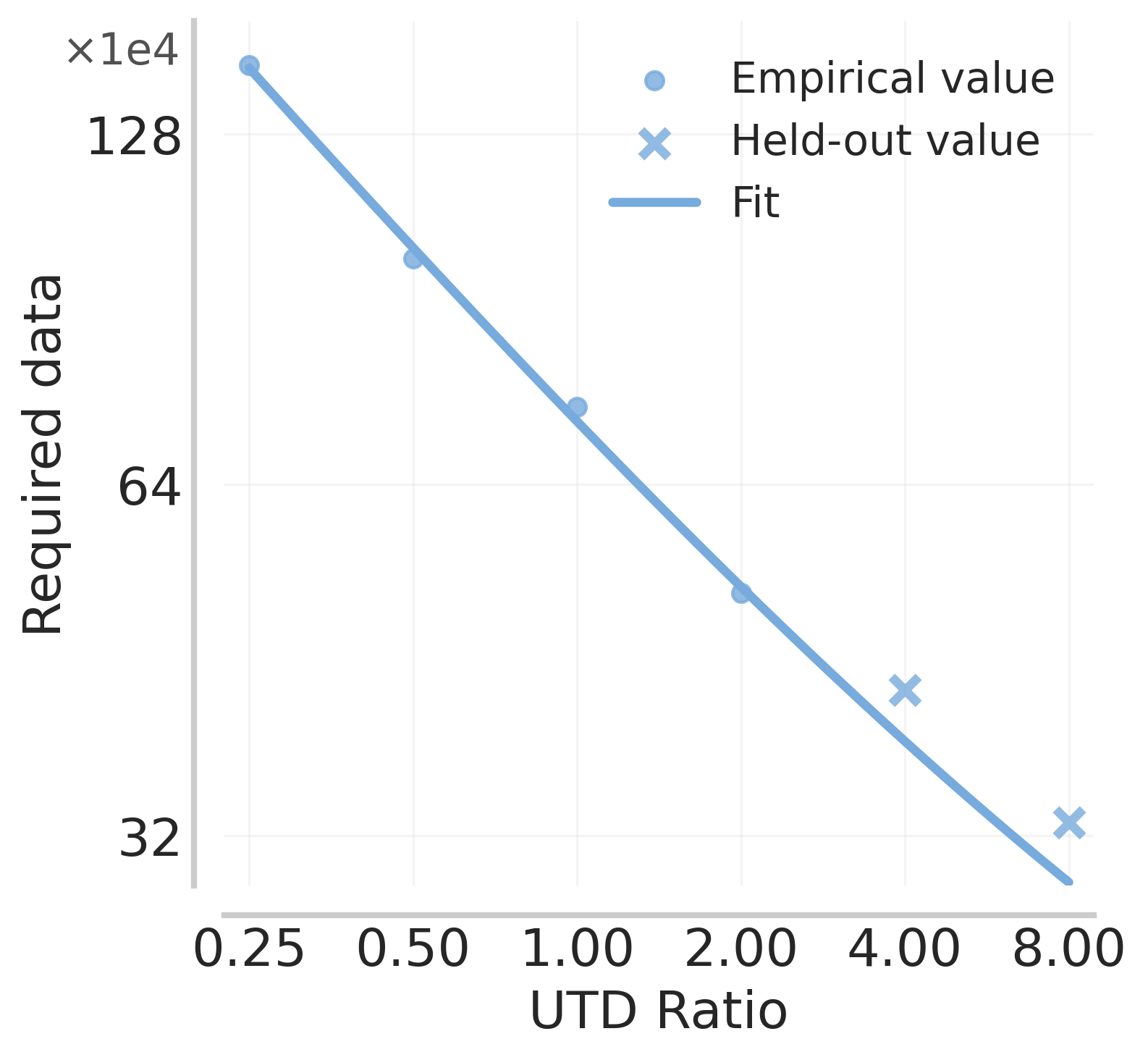

Compute-Optimal Scaling for Value-Based Deep RL

Preston Fu*, Oleh Rybkin*, Zhiyuan Zhou, Michal Nauman, Pieter Abbeel, Sergey Levine, Aviral Kumar

NeurIPS, 2025

Value-Based Deep RL Scales Predictably

Oleh Rybkin, Michal Nauman, Preston Fu, Charlie Snell, Pieter Abbeel, Sergey Levine, Aviral Kumar

ICML, 2025

ICLR Robot Learning Workshop, 2025 (oral)

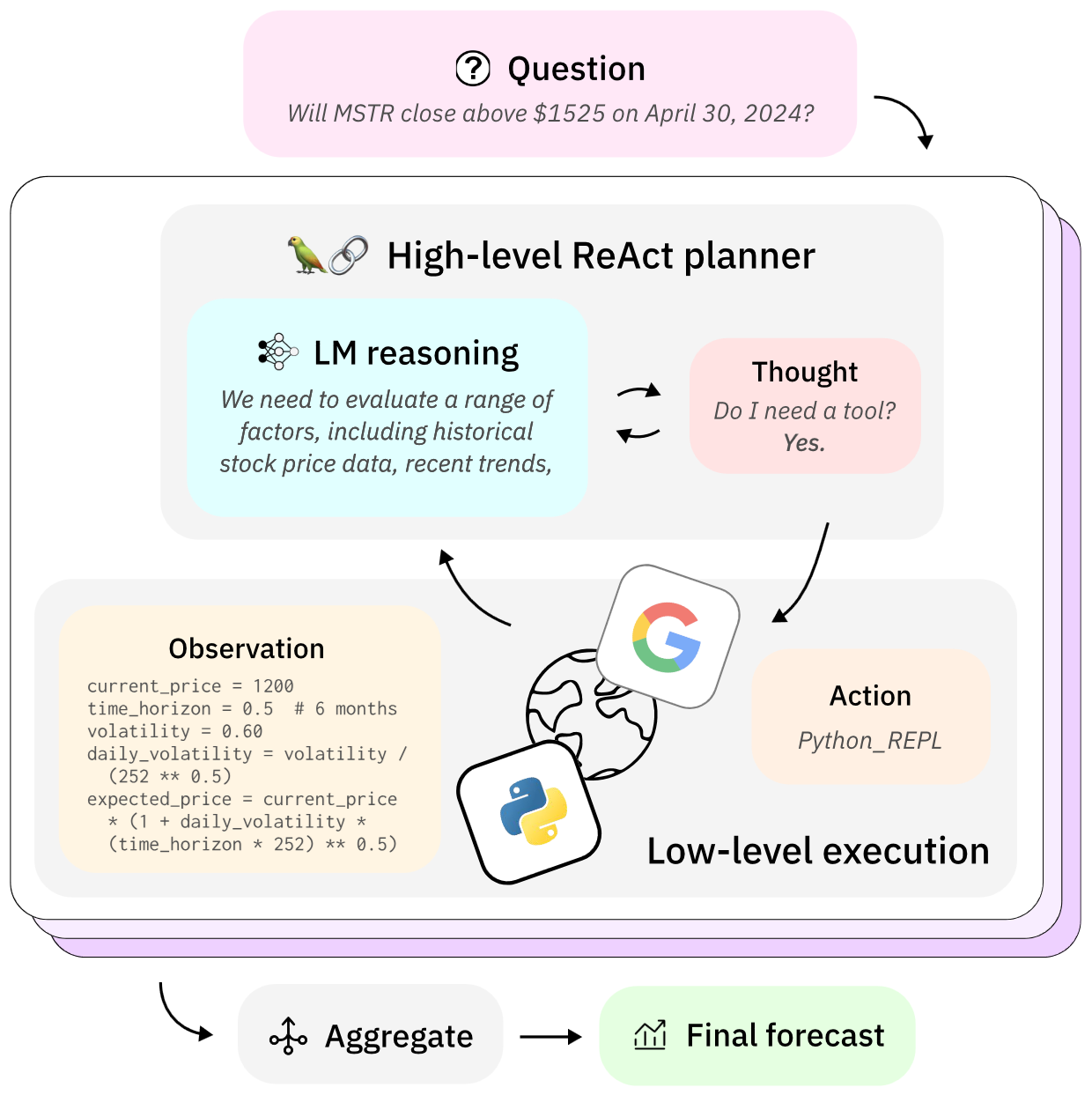

Reasoning and Tools for Human-Level Forecasting

Elvis Hsieh*, Preston Fu*, Jonathan Chen*

NeurIPS Math-AI Workshop, 2024

EMNLP Future Event Detection Workshop, 2024 (oral)